Relax…this isn’t a Safety I, Safety II, Safety III, or even a Safety IV comparison/spat…but the safety engineering profession is on the cusp of a paradigm shift (in fact, it has already begun), owing to the increasing use of autonomous systems in safety-related applications. No longer can one maintain the neck-below-the-sand-level posture when considering the safety of autonomous systems, now that the human operator has been taken out of the loop.

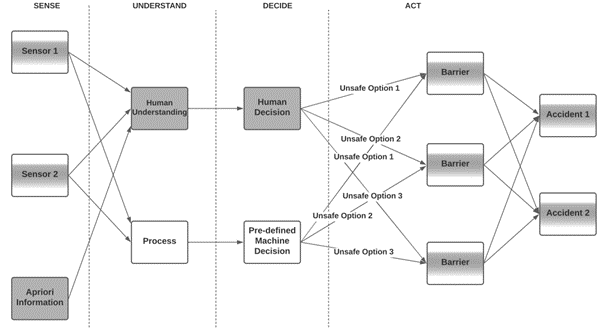

Traditionally, a human operator has been relied upon to maintain system safety. Figure 1 below shows the accident model for a traditional system, where a human operator has control over the decisions that must be made (shaded grey). Even systems that use software to implement decisions do so in collaboration with a human (who gets the same information from the sensor as the computer does), and any decisions ‘made’ by the computer are human-based decisions anyway (as a human engineer has pre-coded fixed responses to a set of defined criteria).

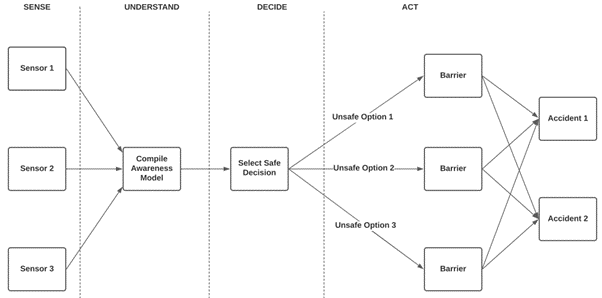
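
To make the idea of ‘pre-coded fixed responses to a set of defined criteria’ concrete, here is a minimal sketch of what such logic tends to look like. Everything in it is hypothetical and purely illustrative: the `SensorReading` type, the `fixed_response` function, the thresholds and the response names are assumptions, not taken from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorReading:
    """Hypothetical sensor output; the same values would be shown to the operator."""
    distance_m: float  # distance to the nearest detected object, in metres
    speed_mps: float   # current speed of the system, in metres per second


def fixed_response(reading: SensorReading) -> str:
    """Return a response chosen in advance by a human engineer.

    Every branch and threshold below is an illustrative assumption. The point
    is that the 'decision' is a lookup of criteria a person wrote down at
    design time, so it can be reviewed line by line.
    """
    if reading.distance_m < 5.0:
        return "EMERGENCY_STOP"
    if reading.distance_m < 20.0 and reading.speed_mps > 10.0:
        return "ALERT_OPERATOR"
    return "CONTINUE"
```

Because every branch here can be enumerated, each one can be traced to a requirement and checked. A learned detection or classification model offers no such list of branches to inspect.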

Contrast this with autonomous systems, where the human has been removed from the safety loop. We rely instead on the autonomous system to make decisions, and to react safely to any unanticipated events the operating environment may present, including edge cases (Figure 2).

This gives rise to new hazards, causes and failure paths. Whereas such decisions were previously human-controlled and treated as a human factors issue, they are now a technical one.

To be clear, we are not (yet?) saying that extant safety engineering activities/analyses are redundant, or that new ones are necessarily needed, but the existing ones may need enhancing. As the Manifesto for Reality-Based Safety Science states…(I’m paraphrasing)…no one needs a YARP (yet another acronym paper) that is ‘successfully’ applied to a (fabricated) case study, and that is far from our intent.

The traditional causal path is certainly being challenged, however. Many questions and challenges have arisen. As an illustrative example, ask yourself how confident you are that your existing safety engineering tool kit will identify and mitigate:

- Errors in a detection algorithm

- Errors in a classification algorithm

- Errors in decisions taken by an autonomous system

- Hallucinations of your autonomous system (just ask Elon…)?

Could your current safety engineering tool kit elicit safety requirements to mitigate the following fault/failure conditions:

- Sensor ‘failures’ through dirt or insects obscuring sensors

- Sensor obscuration through inclement weather

- Sensor blinding through low sun/bright lighting

- Inability to detect an object owing to its texture

- Inability to detect an object because its colours are similar to those of its immediate environment

- Inability to detect an object due to its translucent appearance?

…how confident can you be that the ‘AND’ gates in your Fault Tree really are ‘AND’ gates, with genuinely independent inputs? Are your HAZOP guidewords still complete, and correct? Do the terms ‘redundant’ or ‘diverse’ need updating?
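
The ‘AND’ gate question can be illustrated with a little arithmetic. The sketch below compares the textbook independent ‘AND’ gate with a simplified beta-factor common-cause model, in which a fraction `beta` of each channel’s failure probability is attributed to a shared cause (for example, low sun blinding two cameras at once). The function names and the numbers used are illustrative assumptions only, not measured data and not the method from our research.

```python
def and_gate_independent(p_a: float, p_b: float) -> float:
    """Probability that both inputs fail, assuming they are fully independent."""
    return p_a * p_b


def and_gate_common_cause(p: float, beta: float) -> float:
    """Probability that two identical channels both fail under a simplified
    beta-factor model.

    p    -- failure probability of each channel (assumed identical)
    beta -- fraction of p attributed to a shared (common) cause, 0 <= beta <= 1

    Both channels fail if the common cause occurs, or if it does not occur
    and each channel fails independently of the other.
    """
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie between 0 and 1")
    p_common = beta * p               # shared cause takes out both channels
    p_individual = (1.0 - beta) * p   # remaining, channel-specific failures
    return p_common + (1.0 - p_common) * p_individual ** 2


if __name__ == "__main__":
    p = 1e-3  # illustrative per-channel failure probability
    print("independent AND gate:", and_gate_independent(p, p))
    print("with 10% common cause:", and_gate_common_cause(p, beta=0.1))
```

With these illustrative figures, even a modest shared cause dominates the result, so the gate behaves far more like an ‘OR’ on the common cause than the ‘AND’ drawn on the tree.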

Along with one of my colleagues, I’m currently researching how existing safety engineering techniques can be adapted to assure the safe decision-making of autonomous systems, and investigating whether new techniques may be needed. We hope to share news on our first published paper on this very soon. Watch this space…